Whether for business or personal use, an obstacle to exploiting AI is that the space is fraught with data privacy challenges and dilemmas. Nobody wants their personal life (and surely not their employer's intellectual property) inadvertently ending up as part of a training dataset or an advertising profile. For all their utility, hosted LLM services like ChatGPT, Gemini, and Microsoft Copilot leave me with a feeling that I am constantly being watched by companies that already know too much about me.

That's what makes LM Studio so compelling. It provides a ChatGPT-like GUI for the llama.cpp inference library, letting you download models and run them on your local machine. I've been a fan of llama.cpp for a while, so it's cool to see it bundled up with so many features in such an accessible way.

This article discusses some of the features I have found most useful so far and gets into the licensing (spoiler alert: it's proprietary).

For those who are wondering, yes, it does run on Electron, unfortunately. I say "unfortunately" because Electron tends to be a resource hog, and the lost RAM is especially detrimental for this application because the models themselves are likely already going to strain system memory for a lot of users. When I was trying out the original llama.cpp on an 8 GB MacBook Air, a gig or two of extra memory usage would have been tough (their docs do admit that they built the app for ~16 GB of RAM minimum). However, before you label me a hater, I appreciate that they were able to ship a solid app on Windows, Mac, and Linux.
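To put rough numbers on why memory is so tight, here is a back-of-the-envelope sketch in Python. It only estimates the size of the model weights from the parameter count and the bits stored per weight. The function name and the example model sizes are my own illustrations, and real usage will be higher once you add the context cache, the app itself, and the small overhead that quantized formats carry.

```python
def weights_memory_gb(n_params_billion: float, bits_per_weight: float) -> float:
    """Rough size of the model weights alone, in gigabytes (1 GB = 1e9 bytes).

    Assumption: every weight is stored at the same nominal bit width.
    This ignores the context cache, runtime buffers, and quantization overhead.
    """
    total_bytes = n_params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# Illustrative sizes only: an 8-billion-parameter model at two precisions.
for bits in (16, 4):
    print(f"8B model at {bits}-bit: ~{weights_memory_gb(8, bits):.1f} GB of weights")
```

By this estimate, a 4-bit 8B model already takes up about half of an 8 GB machine before anything else is loaded, which is why an extra gig or two for the UI matters.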

Local RAG

Retrieval-augmented generation is naturally the kind of thing that's valuable to run locally. If you have an information advantage, you are surely leaking it when you send sensitive data to an external service that might train models on it.

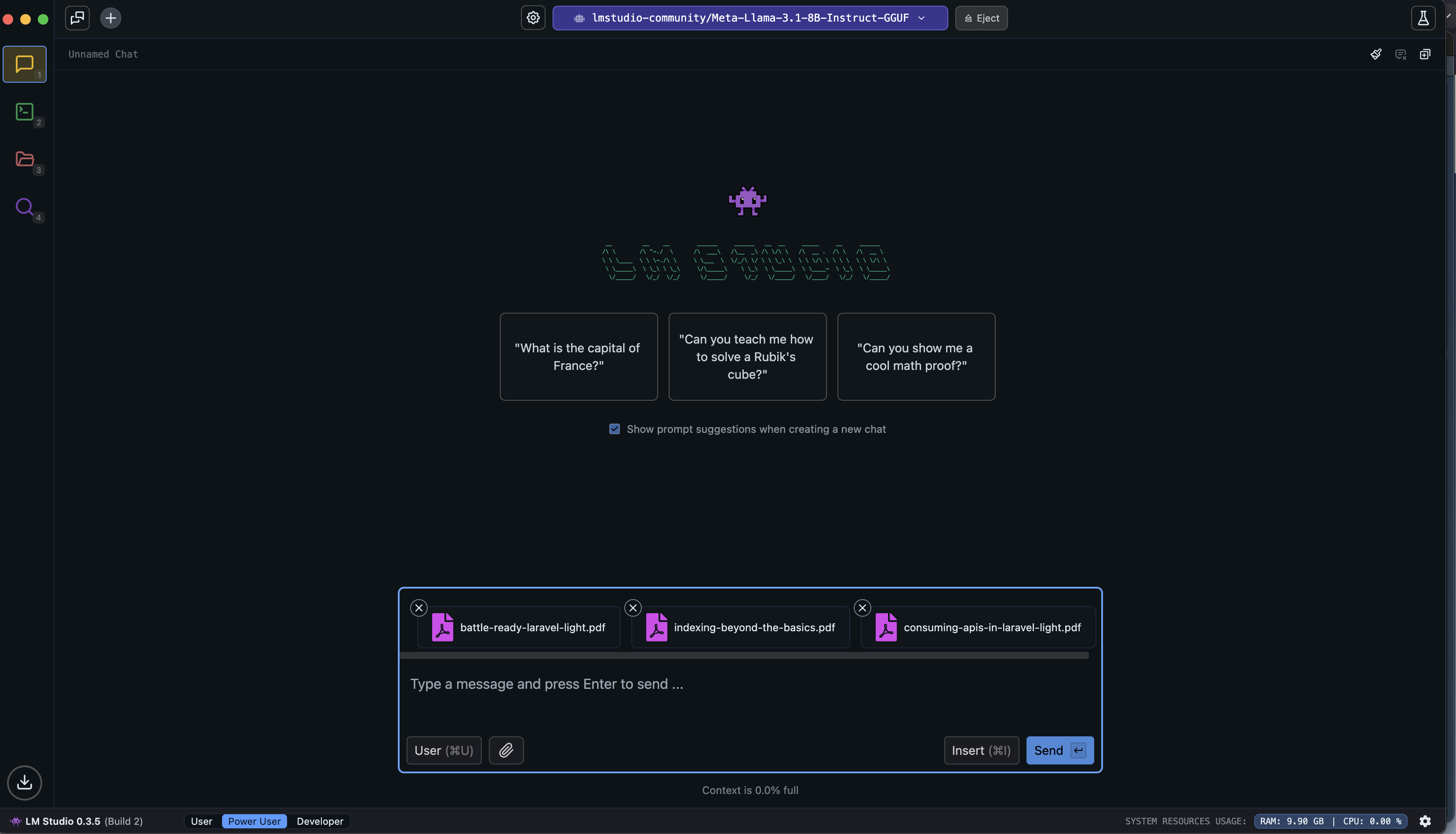

From a utility perspective, it would be cool if we could do RAG on more documents at once, but for now I like the convenience of this RAG on-demand feature. Naturally, I bought some programming books on Black Friday and had to try loading them into a RAG session in LM Studio.

This RAG system was definitely able to answer various questions about my documents with a Llama 3 variant. It took very little time to go from setup to actually getting answers in chat. I also like that it was able to kind of go "hey, that's the end of what's been said about this in the source you gave me, but in general, blah blah blah."
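If you haven't looked under the hood of RAG before, the basic idea fits in a few lines of Python. To be clear, this is a toy sketch and not how LM Studio implements it: I'm assuming simple word-overlap scoring where a real system would typically use embeddings, and every function name here is made up for illustration.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def chunk_text(text: str, chunk_words: int = 50) -> list[str]:
    """Split a document into consecutive chunks of up to chunk_words words."""
    words = text.split()
    return [" ".join(words[i:i + chunk_words]) for i in range(0, len(words), chunk_words)]


def score(query: str, chunk: str) -> int:
    """Count query word occurrences that also appear in the chunk."""
    query_counts = Counter(tokenize(query))
    chunk_counts = Counter(tokenize(chunk))
    return sum(min(count, chunk_counts[word]) for word, count in query_counts.items())


def retrieve(query: str, chunks: list[str], top_k: int = 2) -> list[str]:
    """Return up to top_k chunks with the highest overlap, dropping zero-score chunks."""
    ranked = sorted(chunks, key=lambda chunk: score(query, chunk), reverse=True)
    return [chunk for chunk in ranked[:top_k] if score(query, chunk) > 0]


def build_prompt(query: str, context_chunks: list[str]) -> str:
    """Put the retrieved chunks in front of the question before it goes to the model."""
    context = "\n\n".join(context_chunks)
    return (
        "Answer using the context below. If the context does not cover it, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )
```

The "retrieval" part picks the most relevant chunks, and the "augmented generation" part is just the model answering a prompt that has those chunks pasted into it. The instruction to admit when the context runs out is the same behavior I liked seeing in the chat.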

Other Cool Features

- Various levels of verbosity/detail in the UI: choose from a user, power user, or developer level (don't clutter the UI with features that you don't need)

- Built-in OpenAI-compatible API endpoint you can spin up with local models for dev experiments

- It actually shows you what percent of the context is full (this is a major UX issue with ChatGPT and a lot of other commercial AI offerings)

- Adjustable context length (RAM dependent)

- Supports GGUF models on all devices and MLX on Mac

- Supports multimodal models with vision like LLaVA (this can be very valuable if you are on the free version of ChatGPT and hit limits quickly with vision usage)
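Since the local endpoint is OpenAI-compatible, you can poke at it with nothing but the Python standard library. This is a minimal sketch under a few assumptions: that the server is running at `http://localhost:1234/v1` (check the address the app shows you when you start the server), that `local-model` is a placeholder for whatever model identifier you have loaded, and that the response follows the usual chat completions shape. The helper names are my own.

```python
import json
import urllib.request

BASE_URL = "http://localhost:1234/v1"  # assumption: adjust to the address your server reports
MODEL_NAME = "local-model"  # hypothetical placeholder for the loaded model's identifier


def build_chat_request(prompt: str, model: str = MODEL_NAME, temperature: float = 0.7) -> dict:
    """Build a chat completions payload in the OpenAI request format."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }


def chat(prompt: str, base_url: str = BASE_URL, timeout: float = 60.0) -> str:
    """POST the prompt to the local server and return the first reply's text."""
    payload = json.dumps(build_chat_request(prompt)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["choices"][0]["message"]["content"]
```

With the server started and a model loaded, something like `print(chat("Explain GGUF in one sentence."))` should print the model's reply. Nothing in this exchange needs to leave your machine, which is the whole point.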

I think showing the user the context window explicitly is a game-changer. One of the classic worst parts of using ChatGPT and other LLM experiences is that you don't get any warning when they start forgetting stuff because the chat has gotten too long. Sure, you can always have ChatGPT summarize the current context to minimize lost information, but you have to know when to do this without anything telling you it's time. LM Studio's indicator is a great solution. When I'm chatting with a model in LM Studio, seeing the % of context filled gives me urgency to make leaps in solving problems before the model forgets stuff.
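The math behind that indicator is simple enough to sketch in Python. This is my own illustration rather than LM Studio's code, and the 80% threshold is an arbitrary number I picked as a cue to summarize before the window overflows.

```python
def context_fill_percent(tokens_used: int, context_length: int) -> float:
    """Percentage of the context window currently occupied, capped at 100."""
    if context_length <= 0:
        raise ValueError("context_length must be positive")
    return min(100.0, 100.0 * tokens_used / context_length)


def should_summarize(tokens_used: int, context_length: int, threshold_percent: float = 80.0) -> bool:
    """True once the fill level reaches the (arbitrary) threshold."""
    return context_fill_percent(tokens_used, context_length) >= threshold_percent


# Illustrative numbers: 2,048 tokens used in an 8,192-token window.
print(f"{context_fill_percent(2048, 8192):.0f}% full")
```

The hard part with hosted chat products isn't the arithmetic. It's that they never show you either of the two numbers.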

Licensing: the Downside?

While LM Studio is free as in free beer, it is indeed proprietary software. There are two important takeaways:

- It's only free for personal use; to use it at work, you need to contact them and presumably start some sales process

- Since we can't look at the code, we have to trust (a word I hate in most privacy and security contexts) that they aren't collecting data

I don't like when companies do this thing where you have to talk to them to get a price! It's desktop software! Give me a number and let me consider if it adds enough value.

Anyway, regarding my point #2 above, I think it's actually a good thing that LM Studio is in the business of selling premium software to business users. They actually have much less inherent incentive to store/use sensitive data than AIaaS providers. But notice I didn't say no incentive. I've never heard of proprietary software that doesn't try to report "telemetry" (a broad term) somehow, so you might want to turn off your wifi when you are using LM Studio if you are paranoid.

Evaluating the Value

The value of an offering like this must be compared to something like ChatGPT Enterprise, OpenAI's high-end product. And unlike that kind of product, LM Studio does not provide the compute. Your org might need to invest in some heavier-duty laptops for your employees if you want them all using this.

While I again have no idea how much business use of LM Studio costs (let me know in the comments if you do!), Nomic's competitor GPT4All has an enterprise tier that starts at $25 per device per month at the time of writing. That's actually the same as ChatGPT's business plan right now too. It seems to be a competitive space.

As these LLM technologies have flourished over the last couple of years, I've tried out countless bleeding-edge open source Python AI projects and wasted countless hours of my life and mental energy fighting all sorts of little issues with stuff like Docker, venv, YAML configuration files, and all sorts of other jazz. If you're leading a technical team, compare the cost of this software against the cost of a high-friction setup (expecting your team to keep some combination of open-source AI components duct-taped together with shell scripts in addition to their full-time jobs).

Concluding thoughts

We seem to be entering an era in AI where the most exciting models aren't necessarily coming out of OpenAI anymore, and LM Studio seems to be a decent UI for staying in the action. I like that the installation process was turnkey and I was ready to download models and get started rather quickly.

I'm also going to go out there and say that I think there is actually a lot of moral value in withholding training data from companies like Google and OpenAI who try to get AI regulated so they can keep others out. It's a blatant attempt at regulatory capture, and the stakes are too high not to fight back a little.

Overall, LM Studio has been a fun product to use in my experience so far. With its RAG capabilities, I could see it providing a lot of value for orgs that prioritize privacy.

What do you use to run LLMs on your local machines? What do you think of LM Studio? Let me know in the comments!

Dec 2025 Update: I still use LM Studio occasionally (but not daily or weekly) when there is some new type of model I feel like trying or when there's something really privacy-sensitive I need to do with AI that I don't want to expose to OpenAI or Anthropic. This is roughly a year after I initially published this article, so I think that is a good sign in terms of LM Studio being adaptable but low-maintenance (for users) as various parts of the tech ecosystem advance.

As for things I use daily at this point, I use ChatGPT and GitHub Copilot through my IDEs, PhpStorm and RStudio. That does validate the premise of this article... in general there is reason to be concerned about the amount of data we are exposing to OpenAI, Anthropic, and Google.