Honestly, a few weeks ago, I barely understood vector databases (still mostly accurate at this point tbh) and didn’t think “people like me” (someone without a CS degree) would be using them for at least a few more months. This week, however, after trying out my new (refurbished/old) MacBook Pro with Auto-GPT running on JSON memory, I quickly found myself wanting to hook it up to a vector database instead. That left me in a conundrum.

This article isn’t going to be a comprehensive tutorial because:

- It doesn’t seem like anybody really knows enough about prompting Auto-GPT yet to write really good guides (that they’re willing to share)

- I’m not an expert in everything I’m talking about here, so my time is better spent posting links that hopefully send people in the right direction

The Problem with Pinecone

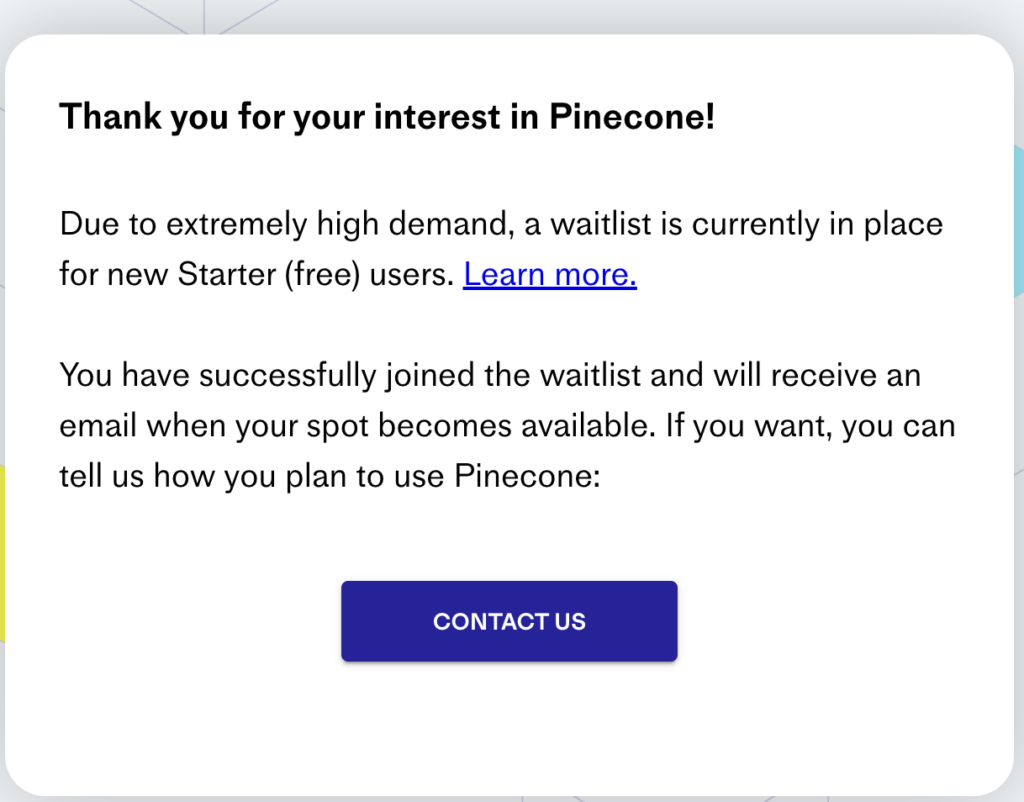

Oof. Well, I guess I snoozed and lost. I actually put that on my Snapchat story, and people probably thought it was kind of weird.

In one of the YouTube videos I was watching about Auto-GPT, some guy who had “never done anything with Python or coding before” was talking about putting his Pinecone API keys in the .env file. No hate. Every generation has different ways of getting introduced to Python. Whatever the reasons, the end result is that I, an experienced database professional, can’t use Pinecone at all at the moment, let alone develop with it. It can’t be run locally anyway. Clearly, there’s a need for alternatives.

Redis in Docker: My Immediate Solution

Redis isn’t a dedicated vector database, but it’s an upgrade over using a JSON file for AI agent memory. And actually, this is my first time using it. Luckily, the README for Auto-GPT on GitHub has good instructions for setting up the .env file to use Redis.
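
If you want to double-check your .env before launching, here is a minimal Python sketch that reads the file and flags anything that doesn't look like a Redis setup. The variable names (`MEMORY_BACKEND`, `REDIS_HOST`, `REDIS_PORT`) and the expected values are my assumptions based on how I remember the README at the time, and the helper functions are made up for illustration, so compare against Auto-GPT's own .env template before trusting it.

```python
from pathlib import Path

# Assumed variable names and values; verify them against Auto-GPT's .env template.
EXPECTED = {
    "MEMORY_BACKEND": "redis",
    "REDIS_HOST": "localhost",
    "REDIS_PORT": "6379",
}


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blanks and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and optional quotes around the value.
        values[key.strip()] = value.strip().strip("\"'")
    return values


def check_redis_settings(env: dict[str, str]) -> list[str]:
    """Return one readable message per setting that differs from EXPECTED."""
    problems = []
    for key, wanted in EXPECTED.items():
        found = env.get(key)
        if found != wanted:
            problems.append(f"{key}: expected {wanted!r}, found {found!r}")
    return problems


if __name__ == "__main__":
    env_path = Path(".env")
    if env_path.exists():
        issues = check_redis_settings(parse_env(env_path.read_text()))
        print("\n".join(issues) if issues else "Redis memory settings look fine.")
    else:
        print("No .env file in the current directory.")
```

Run it from the Auto-GPT folder. It only reads the file and never writes to it, so it's safe to run as often as you like.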

With Docker Desktop installed, spinning up Redis is as easy as running:

docker run -d --name redis-stack-server -p 6379:6379 redis/redis-stack-server:latest
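
To confirm the container is actually answering before pointing Auto-GPT at it, a small Python sketch like the one below can help. It uses only the standard library and sends Redis an inline `PING`, which a default, password-free server answers with `+PONG`. The function name `redis_ping` is made up for this example, and the host and port assume the `-p 6379:6379` mapping from the command above.

```python
import socket


def redis_ping(host: str = "localhost", port: int = 6379, timeout: float = 2.0) -> bool:
    """Send an inline PING and report whether the server answered +PONG."""
    try:
        with socket.create_connection((host, port), timeout=timeout) as conn:
            conn.sendall(b"PING\r\n")
            reply = conn.recv(64)
    except OSError:
        # Covers connection refused, timeouts, and unresolved hosts.
        return False
    return reply.startswith(b"+PONG")


if __name__ == "__main__":
    if redis_ping():
        print("Redis is up")
    else:
        print("Redis is not reachable on localhost:6379")
```

If you set a password on the server, this check will report a failure because Redis replies with an authentication error instead of `+PONG`. In that case a proper client library is the better tool.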

Milvus: an Open-Source Vector Database for the Long-Term?

At the time of writing, it appears that Milvus support is in the works for Auto-GPT, but it isn’t in the stable branch yet (see the README.md on the unstable “master” branch).

Milvus is an open-source vector database with both hosted and local installation options. That makes it more flexible than a hosted-only service, and I naturally like having the option of running an advanced vector db locally.

The installation guide may look slightly intimidating since you have to use Kubernetes for the process, but I found it well-written. I decided it was worthwhile to give it a shot in preparation for Auto-GPT officially supporting it in the future, and I had no problem following the guide despite having close to zero previous experience with Kubernetes. With Docker Desktop already installed on my 2017 i7 MacBook Pro (16 GB RAM), installing kubectl was a breeze.

Even though I’m not using it with Auto-GPT yet, I’m glad I went through this process. I have a cutting-edge vector database installed and ready to go (hopefully), and I got an easy Kubernetes lesson in the process.

Update: I didn't actually like using Milvus with Auto-GPT. I found Weaviate easier to configure for this use case.